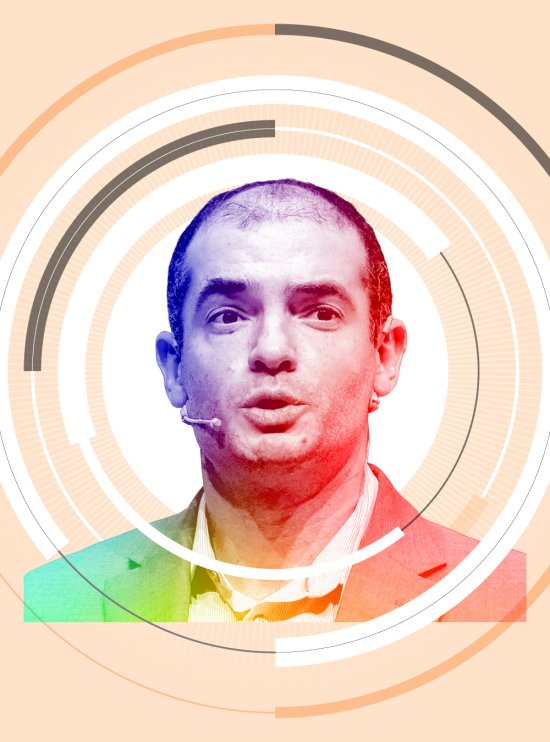

OpenAI’s former chief scientist has had a tumultuous year. Ilya Sutskever, once widely regarded as perhaps the most brilliant mind at OpenAI, voted in his capacity as a board member last November to remove Sam Altman as CEO. The move was unsuccessful, in part because Sutskever reportedly bowed to pressure from his colleagues and reversed his vote. After those fateful events, Sutskever disappeared from OpenAI’s offices so noticeably that memes began circulating online asking what had happened to him. Finally, in May, Sutskever announced he had stepped down from the company.

On X (formerly Twitter), Sutskever praised OpenAI’s leadership and said he believed the company would build AGI safely. But his departure came at the same time as several other safety-focused staff left with more pessimistic public statements about OpenAI’s safety culture, which seemed to underline the impression that something fundamental had shifted at the company. The Superalignment team that Sutskever co-ran, which was aimed at developing methods to make advanced AIs controllable so they don’t wipe out humanity, had been promised 20% of OpenAI’s computing power to do its work. But the team sometimes struggled to access that compute and was increasingly “sailing against the wind,” Sutskever’s co-lead Jan Leike said in a series of messages announcing his own departure, in which he criticized the safety culture at OpenAI as having “taken a backseat” to building new “shiny products.”

In June, Sutskever announced he was starting a new AI company, called Safe Superintelligence, which aims to build advanced AI outside of the market, to avoid becoming “stuck in a competitive rat race.” The implication that OpenAI is stuck in such a rat race is the most pointed public criticism Sutskever has made of his former employer.

Safe Superintelligence is at least the third AI company, after OpenAI and Anthropic, to be founded by industry insiders on the belief that they could build superintelligent AI more safely than their irresponsible competitors. But so far at least, with each new entrant, the race has only accelerated. Through a spokesperson, Sutskever declined a request to be interviewed for this story; the spokesperson said he was in “what he describes as Monk Mode—heads down in the lab.”


*Disclosure: OpenAI and TIME have a licensing and technology agreement that allows OpenAI to access TIME’s archives.